Hey Guys,

I am trying to use the VC to run multiple experiments one after another, and there are two situations in which I get an HTTP 504 error.

The first is presumably a bug and occurs when I call vc._VirtualCoach__get_available_files() while the csv_records folder contains many items.

The output is:

[...]

Input In [8], in computeIterationServer_singleThread(inputs, CAMfunction, blacklist, instanceLimit, sleeptime, maxTries, osimPath)

144 lastData = None

146 print(f'STEP 5.1: Get CSV file list...')

--> 147 csv_files = vc._VirtualCoach__get_available_files(experiment_id=params["exp_id"],file_type="csv")

148 keys = natsort.natsorted(csv_files.keys())[-requestedInstances:] #the last N names

150 print(f'STEP 5.2: Read CSV files...')

File ~/nrp/src/VirtualCoach/hbp_nrp_virtual_coach/pynrp/virtual_coach.py:624, in VirtualCoach.__get_available_files(self, experiment_id, file_type)

620 response = requests.get(self.__config['proxy-services']['files'] % \

621 (experiment_id, file_type,), headers=self.__http_headers)

623 if response.status_code != http.client.OK:

--> 624 raise Exception('Error when getting files Status Code: %d. Error: %s'

625 % (response.status_code, response))

626 files = json.loads(response.content)

627 distinct_runs = defaultdict(dict)

Exception: Error when getting files Status Code: 504. Error: <Response [504]>

The error occurs reliably and reproducibly when the csv_records folder is full, and it disappears after emptying the folder manually through the web interface.

As my script is based on many short runs with different parameters, I can't really avoid generating many CSV files. Emptying the folder manually is rather impractical (especially when running the script overnight).

As a workaround, is there a way to delete files from the VC? I only found functions to read files. In the long run, however, it would be nice if this error did not occur at all, or if there were an option for temporary CSV output, as I really only need the CSV output once after the run. Am I overlooking an existing solution for this case? Do you have any hints or ideas?

The second situation where the HTTP 504 occurs is when I call vc.launch_experiment() multiple times.

To explain when this happens, I need to go into the actual script a bit, as the error does not occur right at the start but only after some time.

I do multiple iterations of the following steps:

- (Re)create the VC instance via vc = VirtualCoach(...)

- Launch N experiments on N different available servers, one after another, via sim = vc.launch_experiment(...)

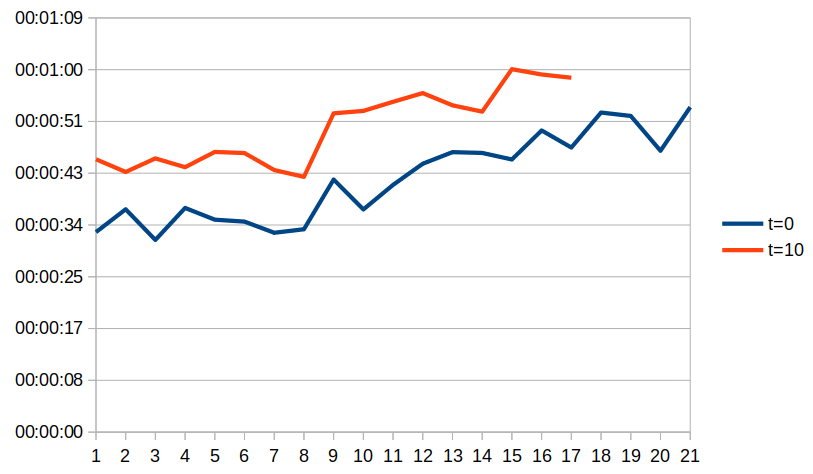

- vc.launch_experiment() blocks for ca. 25-45 seconds. As I use a for loop, these calls are not made in rapid succession.

- I save the resulting sim objects in a list for later use

- For each experiment, change the individual simulation parameters in the transfer functions with sim.get_transfer_function(...) and sim.edit_transfer_function(...)

- Start all simulations (sim.start()) using a for loop

- Check the state of each simulation (sim.get_state()) until they are all finished and then stop them (sim.stop())

- (Re)create the VC instance via vc = VirtualCoach(...)

- Get the list of CSV files (vc._VirtualCoach__get_available_files(...)) and read the last N files

- Some task-specific computations on the CSV data
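The polling step in the list above (check sim.get_state() until everything is finished, then call sim.stop()) can be sketched roughly as follows. This is only a minimal sketch under assumptions: wait_until_finished, poll_interval and timeout are hypothetical names, and the set of state strings that count as "finished" is passed in by the caller, because which values sim.get_state() returns is not shown in this post.

```python
import time


def wait_until_finished(sims, terminal_states, poll_interval=5.0, timeout=600.0):
    """Poll each simulation until all report a terminal state, then stop them.

    `sims` is any list of objects exposing get_state() and stop().
    `terminal_states` is supplied by the caller (assumption: the exact
    state strings are not known here).
    Returns the simulations that had not finished when `timeout` expired.
    """
    deadline = time.monotonic() + timeout
    pending = list(sims)
    while pending and time.monotonic() < deadline:
        # Keep only the simulations that are still running.
        pending = [s for s in pending if s.get_state() not in terminal_states]
        if pending:
            time.sleep(poll_interval)
    # Stop every simulation, including any that ran into the timeout.
    for sim in sims:
        sim.stop()
    return pending
```

The timeout is there so that a simulation that never reaches a finished state cannot block an overnight run forever; the returned list makes it possible to log which ones were cut off.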

In most cases, one of these iterations runs just fine (if the csv_records folder was emptied beforehand), and often several more do too. However, after some time (e.g. somewhere between 3 and 30 runs), sim = vc.launch_experiment(...) in step 3 throws an HTTP 504 error.

The logging output before the Exception is:

[...]

INFO: [2022-08-30 21:44:38,625 - VirtualCoach] Retrieving list of available servers.

INFO: [2022-08-30 21:44:38,699 - VirtualCoach] Checking server availability for: prod_latest_backend-7-80

INFO: [2022-08-30 21:44:38,700 - Simulation] Attempting to launch nrpexperiment_robobrain_mouse_0_0_0_0_0 on prod_latest_backend-7-80.

ERROR: [2022-08-30 21:45:28,798 - Simulation] Unable to launch on prod_latest_backend-7-80: Simulation responded with HTTP status 504

Traceback (most recent call last):

File "/home/bbpnrsoa/nrp/src/VirtualCoach/hbp_nrp_virtual_coach/pynrp/simulation.py", line 158, in launch

raise Exception(

Exception: Simulation responded with HTTP status 504

The exception output:

---------------------------------------------------------------------------

Exception Traceback (most recent call last)

[...]

Input In [8], in launchSequential(vc, params, requestedInstances, availableServers, sleeptime)

94 print(f'[{i}]: Try to launch {params["exp_id"]} on {server}...', end = ' ')

95 try:

96 #vc = VirtualCoach(environment='http://148.187.149.212', oidc_username='<username>', oidc_password='<password>')

97 #time.sleep(10)

98 #availableServers=getAvailableServer(vc,blacklist)

99 #server=availableServers[0]

--> 101 sim = vc.launch_experiment(params["exp_id"],server=server) #[NRP issue]: somthimes this fails.

102 sims.append(sim)

103 success = True

File ~/nrp/src/VirtualCoach/hbp_nrp_virtual_coach/pynrp/virtual_coach.py:289, in VirtualCoach.launch_experiment(self, experiment_id, server, reservation, cloned, brain_processes, profiler, recordingPath)

286 logger.info(e)

288 # simulation launch unsuccessful, abort

--> 289 raise Exception('Simulation launch failed, consult the logs or try again later.')

Exception: Simulation launch failed, consult the logs or try again later.

The logging output afterwards:

INFO: [2022-08-30 21:47:59,238 - roslibpy] WebSocket connection closed: Code=1006, Reason=connection was closed uncleanly ("peer dropped the TCP connection without previous WebSocket closing handshake")

INFO: [2022-08-30 21:47:59,239 - twisted] <twisted.internet.tcp.Connector instance at 0x7f61250e4370 disconnected IPv4Address(type='TCP', host='148.187.149.212', port=80)> will retry in 2 seconds

INFO: [2022-08-30 21:47:59,239 - twisted] Stopping factory <roslibpy.comm.comm_autobahn.AutobahnRosBridgeClientFactory object at 0x7f612007ec10>

INFO: [2022-08-30 21:48:02,090 - twisted] Starting factory <roslibpy.comm.comm_autobahn.AutobahnRosBridgeClientFactory object at 0x7f612007ec10>

INFO: [2022-08-30 21:48:02,221 - roslibpy] Connection to ROS MASTER ready.

[the above 5 lines repeat a number of times]

INFO: [2022-08-30 21:58:05,099 - roslibpy] WebSocket connection closed: Code=1006, Reason=connection was closed uncleanly ("peer dropped the TCP connection without previous WebSocket closing handshake")

INFO: [2022-08-30 21:58:05,099 - twisted] <twisted.internet.tcp.Connector instance at 0x7f61250e4370 disconnected IPv4Address(type='TCP', host='148.187.149.212', port=80)> will retry in 2 seconds

INFO: [2022-08-30 21:58:05,100 - twisted] Stopping factory <roslibpy.comm.comm_autobahn.AutobahnRosBridgeClientFactory object at 0x7f612007ec10>

INFO: [2022-08-30 21:58:07,117 - twisted] Starting factory <roslibpy.comm.comm_autobahn.AutobahnRosBridgeClientFactory object at 0x7f612007ec10>

INFO: [2022-08-30 21:58:07,279 - twisted] failing WebSocket opening handshake ('WebSocket connection upgrade failed [401]: Unauthorized')

WARNING: [2022-08-30 21:58:07,280 - twisted] dropping connection to peer tcp4:148.187.149.212:80 with abort=True: WebSocket connection upgrade failed [401]: Unauthorized

[the above 6 lines repeat indefinitely]
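Because the serial launch failure is sporadic, one thing that could be tried on the client side is wrapping the launch call in a retry with a growing delay. The following is a minimal sketch under assumptions, not a confirmed fix: call_with_retries, max_tries and base_delay are hypothetical names, and nothing here addresses why the server answers with 504 in the first place.

```python
import time


def call_with_retries(action, max_tries=3, base_delay=30.0, sleep=time.sleep):
    """Call action() until it succeeds or max_tries attempts are used up.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts.
    The last exception is re-raised if every attempt fails.
    """
    if max_tries < 1:
        raise ValueError("max_tries must be at least 1")
    for attempt in range(1, max_tries + 1):
        try:
            return action()
        except Exception as exc:  # the launch failure above is a plain Exception
            if attempt == max_tries:
                raise
            delay = base_delay * 2 ** (attempt - 1)
            print(f"attempt {attempt} failed ({exc}); retrying in {delay:.0f}s")
            sleep(delay)
```

With the names from the traceback above, a call might look like sim = call_with_retries(lambda: vc.launch_experiment(params["exp_id"], server=server)). The sleep parameter only exists so the waiting can be replaced in tests.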

If you want/need I can also provide the actual source code.

Some context:

- I run the virtual coach in the latest NRP docker image

- Leaving out step 6 did not change anything

- Adding sleep statements of between 0 and 30 s after each step did not help either

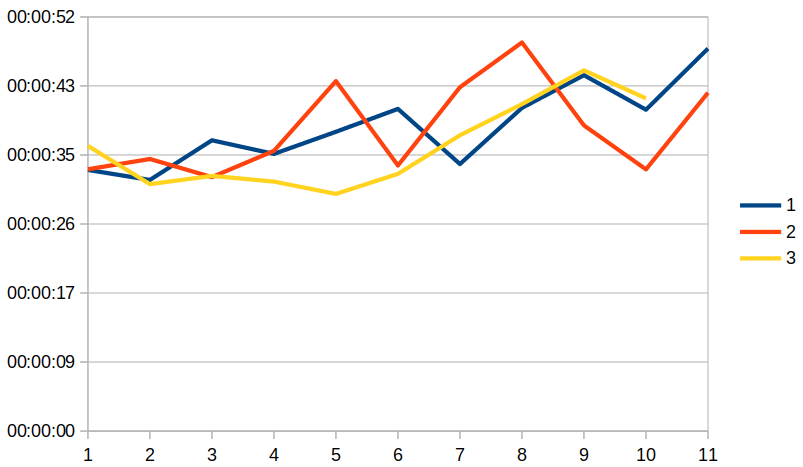

- The error occurs for almost any N (the number of “parallel” simulations); for some reason, it only works for N=2 or N=3

- The N servers I use are normally the same, so the first simulation of iteration 1 normally runs on the same server as the first simulation of iteration 2.

- I did not see a pattern of servers that are prone to causing this error. Every time it's a different one, and none seem unaffected.
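To check the "no pattern across servers" impression over a longer run, the launch attempts and failures could be tallied per server name. This is a small sketch with hypothetical names (LaunchStats, record, failure_rates); it uses only the standard library and does not touch the VC itself.

```python
from collections import Counter


class LaunchStats:
    """Count launch attempts and failures per server name."""

    def __init__(self):
        self.attempts = Counter()
        self.failures = Counter()

    def record(self, server, ok):
        """Register one launch attempt on `server`; `ok` is False on failure."""
        self.attempts[server] += 1
        if not ok:
            self.failures[server] += 1

    def failure_rates(self):
        """Return {server: failures / attempts}, highest rate first."""
        rates = {s: self.failures[s] / n for s, n in self.attempts.items()}
        return dict(sorted(rates.items(), key=lambda kv: kv[1], reverse=True))
```

Calling record(server, True) after a successful launch and record(server, False) in the except branch would be enough to see, after a night of runs, whether some servers fail noticeably more often than others.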

The reason why I do not simply run one full simulation after another (which would also allow using get_experiment_run_file for the CSV) is that I intend to replace the for loop of blocking launches in step 1 with actual (thread-)parallel launches. Truly parallel use of multiple servers would greatly speed up my approach. However, when calling vc.launch_experiment() in just two (N=2) parallel threads (with a delay of 2 s) on the same vc object, an HTTP 504 error is guaranteed. The output before the exception looks like this:

[...]

[t=0]: Try to launch nrpexperiment_robobrain_mouse_0_0_0_0_0 on prod_latest_backend-7-80...

INFO: [2022-08-31 12:30:55,367 - VirtualCoach] Preparing to launch nrpexperiment_robobrain_mouse_0_0_0_0_0.

INFO: [2022-08-31 12:30:55,368 - VirtualCoach] Retrieving list of experiments.

[t=1]: Try to launch nrpexperiment_robobrain_mouse_0_0_0_0_0 on prod_latest_backend-7-443...

INFO: [2022-08-31 12:30:57,368 - VirtualCoach] Preparing to launch nrpexperiment_robobrain_mouse_0_0_0_0_0.

INFO: [2022-08-31 12:30:57,369 - VirtualCoach] Retrieving list of experiments.

INFO: [2022-08-31 12:31:04,642 - VirtualCoach] Retrieving list of available servers.

INFO: [2022-08-31 12:31:04,768 - VirtualCoach] Checking server availability for: prod_latest_backend-7-80

INFO: [2022-08-31 12:31:04,769 - Simulation] Attempting to launch nrpexperiment_robobrain_mouse_0_0_0_0_0 on prod_latest_backend-7-80.

INFO: [2022-08-31 12:31:06,045 - VirtualCoach] Retrieving list of available servers.

INFO: [2022-08-31 12:31:06,097 - VirtualCoach] Checking server availability for: prod_latest_backend-7-443

INFO: [2022-08-31 12:31:06,098 - Simulation] Attempting to launch nrpexperiment_robobrain_mouse_0_0_0_0_0 on prod_latest_backend-7-443.

ERROR: [2022-08-31 12:31:54,868 - Simulation] Unable to launch on prod_latest_backend-7-80: Simulation responded with HTTP status 504

Traceback (most recent call last):

File "/home/bbpnrsoa/nrp/src/VirtualCoach/hbp_nrp_virtual_coach/pynrp/simulation.py", line 158, in launch

raise Exception(

Exception: Simulation responded with HTTP status 504

ERROR: [2022-08-31 12:31:56,199 - Simulation] Unable to launch on prod_latest_backend-7-443: Simulation responded with HTTP status 504

Traceback (most recent call last):

File "/home/bbpnrsoa/nrp/src/VirtualCoach/hbp_nrp_virtual_coach/pynrp/simulation.py", line 158, in launch

raise Exception(

Exception: Simulation responded with HTTP status 504

The actual exception message looks like this:

---------------------------------------------------------------------------

RemoteTraceback Traceback (most recent call last)

RemoteTraceback:

"""

Traceback (most recent call last):

File "/usr/lib/python3.8/multiprocessing/pool.py", line 125, in worker

result = (True, func(*args, **kwds))

File "/usr/lib/python3.8/multiprocessing/pool.py", line 48, in mapstar

return list(map(*args))

File "<ipython-input-8-eedb071500b0>", line 38, in launchServer

raise e

File "<ipython-input-8-eedb071500b0>", line 31, in launchServer

sim = vc.launch_experiment(params["exp_id"],server=server) #[NRP issue]: somthimes this fails.

File "/home/bbpnrsoa/nrp/src/VirtualCoach/hbp_nrp_virtual_coach/pynrp/virtual_coach.py", line 289, in launch_experiment

raise Exception('Simulation launch failed, consult the logs or try again later.')

Exception: Simulation launch failed, consult the logs or try again later.

"""

The above exception was the direct cause of the following exception:

Exception Traceback (most recent call last)

[...]

Input In [8], in launchParalell(vc, params, requestedInstances, availableServers, sleeptime)

64 #RUN threads

65 with Pool(requestedInstances) as p:

---> 66 runOutputs = p.map(launchServer, args)

68 #only use the runs where the server started sucessfully

69 sims = []

File /usr/lib/python3.8/multiprocessing/pool.py:364, in Pool.map(self, func, iterable, chunksize)

359 def map(self, func, iterable, chunksize=None):

360 '''

361 Apply `func` to each element in `iterable`, collecting the results

362 in a list that is returned.

363 '''

--> 364 return self._map_async(func, iterable, mapstar, chunksize).get()

File /usr/lib/python3.8/multiprocessing/pool.py:771, in ApplyResult.get(self, timeout)

769 return self._value

770 else:

--> 771 raise self._value

Exception: Simulation launch failed, consult the logs or try again later.

I do not know whether the 504 error in the parallel launches and the 504 error in the serial for-loop launches are related.
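In the parallel traceback above, Pool.map re-raises the first worker exception, so the results of any launches that did succeed are lost. A variant that collects successes and failures separately could look like the sketch below. It is written under assumptions: launch_all, launch_one and stagger are hypothetical names, it uses threads from concurrent.futures instead of the Pool from the traceback, and whether a single vc object can safely be shared between threads is not known. It also does nothing about the cause of the 504 itself.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def launch_all(launch_one, servers, stagger=2.0):
    """Run launch_one(server) for every server in its own thread.

    Returns (successes, failures): two dicts keyed by server name, holding
    the returned object or the raised exception respectively.
    """
    def delayed(index, server):
        # Offset each launch so the requests do not all arrive at once.
        time.sleep(index * stagger)
        return launch_one(server)

    successes, failures = {}, {}
    if not servers:
        return successes, failures
    with ThreadPoolExecutor(max_workers=len(servers)) as pool:
        futures = {server: pool.submit(delayed, i, server)
                   for i, server in enumerate(servers)}
        for server, future in futures.items():
            try:
                successes[server] = future.result()
            except Exception as exc:
                failures[server] = exc
    return successes, failures
```

Here launch_one would be something like lambda server: vc.launch_experiment(params["exp_id"], server=server). The servers in the failures dict could then be retried serially while the successful sim objects are kept.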

It would be great if some of you had ideas on how to fix or circumvent this 504 error. Are there maybe other projects that (successfully) use multiple servers (in parallel) in succession?

Thanks in advance,

Felix