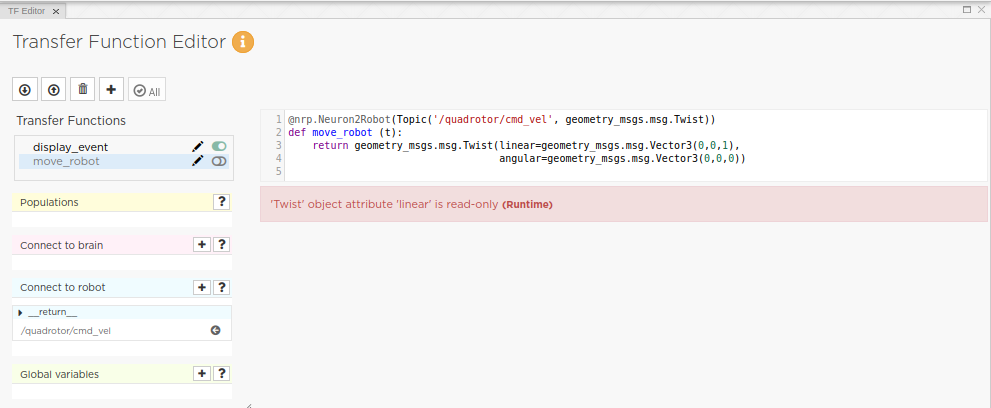

Here is a template experiment with a Hector drone, an attached DVS camera and a transfer function that displays the output of the camera.

To set up the experiment:

Rename both attached files (droneDVS.pdf and quadrotor_model.pdf) so that they end in .zip instead of .pdf.
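If you prefer to script the renaming, here is a minimal Python sketch. It assumes the two attachments were downloaded into the current directory under their forum names; restore_zip is a hypothetical helper that also checks the file really contains a zip archive before renaming it.

```python
import zipfile
from pathlib import Path


def restore_zip(attachment: Path) -> Path:
    """Rename a .pdf attachment to .zip after checking it is really a zip archive."""
    if not zipfile.is_zipfile(attachment):
        raise ValueError(f"{attachment} does not contain a zip archive")
    target = attachment.with_suffix(".zip")
    attachment.rename(target)
    return target


if __name__ == "__main__":
    # Assumption: the attachments were downloaded into the current directory.
    for name in ("droneDVS.pdf", "quadrotor_model.pdf"):
        path = Path(name)
        if path.exists():
            print(restore_zip(path))
```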

Import the zipped experiment (droneDVS.zip)

Install the drone plugin in GazeboRosPackages by following these instructions: https://github.com/RAFALAMAO/hector_quadrotor_noetic (if you use the Docker installation, do this step in the backend container).

To control the drone, you need to add a namespace to the plugin. In the package hector_quadrotor_noetic/hector_quadrotor/hector_quadrotor_controller, open controller.yaml in the params folder and add the namespace quadrotor above the existing controller namespace. Then, in the launch folder, replace the content of controller.launch with:

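```xml
<launch>
  <!-- Load the controller parameters from controller.yaml (with the added quadrotor namespace) -->
  <rosparam file="$(find hector_quadrotor_controller)/params/controller.yaml" />
  <!-- Spawn the twist controller inside the quadrotor namespace -->
  <node name="controller_spawner" ns="quadrotor" pkg="controller_manager" type="spawner"
        respawn="false" output="screen" args="controller/twist"/>
</launch>
```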
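The controller.yaml change can also be scripted. This is a minimal Python sketch, assuming controller.yaml is a single YAML document whose top level is a mapping (such as controller:); it nests everything under quadrotor: by indenting every non-empty line by the same amount, which keeps the relative structure intact. The script name and invocation are hypothetical.

```python
import sys
from pathlib import Path


def add_namespace(yaml_text: str, namespace: str = "quadrotor", indent: str = "  ") -> str:
    """Nest a YAML mapping under a new top-level key by indenting every line."""
    if any(line.rstrip() == f"{namespace}:" for line in yaml_text.splitlines()):
        return yaml_text  # the namespace is already a top-level key; don't wrap twice
    nested = [indent + line if line.strip() else line for line in yaml_text.splitlines()]
    return "\n".join([f"{namespace}:"] + nested) + "\n"


def patch_file(path: Path, namespace: str = "quadrotor") -> None:
    """Rewrite a controller.yaml in place with the extra namespace."""
    path.write_text(add_namespace(path.read_text(), namespace))


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage:
    #   python3 add_namespace.py <path to>/hector_quadrotor_controller/params/controller.yaml
    patch_file(Path(sys.argv[1]))
```

Uniform indentation is used instead of a YAML library so the file's comments and formatting survive unchanged; it will not handle files with several YAML documents (--- separators).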

Remember to build the workspace and source it after installing the drone plugin.

You also need to import the drone model into the NRP: extract quadrotor_model.zip into NRP/Models and run the copy-to-storage.sh script located in that same folder. If you use the Docker installation, you must do this in the backend container; you must also open the frontend container, go to NRP/nrpBackendProxy and run: npm run update_template_models.
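A minimal Python sketch of the model import for a local (non-Docker) installation. It assumes the HBP environment variable points at the NRP root, so that the Models folder is $HBP/Models; adjust the path if your layout differs. It runs copy-to-storage.sh with bash from inside the Models folder and does not cover the frontend step (npm run update_template_models) needed with Docker.

```python
import os
import subprocess
import zipfile
from pathlib import Path
from typing import List


def install_model(archive: Path, models_dir: Path) -> List[str]:
    """Extract a model archive into the NRP Models folder and return the extracted entries."""
    with zipfile.ZipFile(archive) as zf:
        names = zf.namelist()
        zf.extractall(models_dir)
    return names


def copy_to_storage(models_dir: Path) -> None:
    """Run copy-to-storage.sh from inside the Models folder; raises if the script fails."""
    subprocess.run(["bash", "copy-to-storage.sh"], cwd=models_dir, check=True)


if __name__ == "__main__":
    # Assumption: $HBP is the NRP root, so the Models folder is $HBP/Models.
    hbp = os.environ.get("HBP")
    archive = Path("quadrotor_model.zip")
    if hbp and archive.exists():
        models = Path(hbp) / "Models"
        print(install_model(archive, models))
        copy_to_storage(models)
```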

The drone is static by default; you can change this and other properties (including the DVS camera parameters) in quadrotor/model.sdf in the experiment. The drone is controlled through twist topics and needs to be raised first to engage the motors: the linear z component of the twist message has to be positive.
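A minimal rospy sketch of the take-off, assuming the twist controller listens for geometry_msgs/Twist on /quadrotor/cmd_vel. That topic name is hypothetical; check rostopic list while the experiment runs and pass the right one. The script publishes a positive linear z for a given number of seconds, then a zero twist. The ROS imports sit inside main so the command helper can be reused without a ROS install.

```python
import sys


def takeoff_command(climb_rate: float = 0.5) -> dict:
    """Twist components for a vertical climb; a positive linear z is what engages the motors."""
    if climb_rate <= 0:
        raise ValueError("climb_rate must be positive to raise the drone")
    return {"linear": (0.0, 0.0, float(climb_rate)), "angular": (0.0, 0.0, 0.0)}


def main(duration: float, topic: str = "/quadrotor/cmd_vel") -> None:
    """Publish the climb command for `duration` seconds, then a zero twist."""
    import rospy
    from geometry_msgs.msg import Twist, Vector3

    rospy.init_node("drone_takeoff_sketch")
    # Hypothetical topic name: check `rostopic list` for the one your controller uses.
    pub = rospy.Publisher(topic, Twist, queue_size=1)
    cmd = takeoff_command()
    climb = Twist(linear=Vector3(*cmd["linear"]), angular=Vector3(*cmd["angular"]))
    rate = rospy.Rate(10)  # publish at 10 Hz
    end = rospy.Time.now() + rospy.Duration(duration)
    while not rospy.is_shutdown() and rospy.Time.now() < end:
        pub.publish(climb)
        rate.sleep()
    pub.publish(Twist())  # all-zero twist: stop climbing
    rate.sleep()  # give the last message a chance to go out before exiting


if __name__ == "__main__" and len(sys.argv) > 1:
    main(float(sys.argv[1]))  # e.g. python3 takeoff.py 2.0
```

Run it with the climb duration in seconds from a terminal where ROS and the workspace are sourced, while the simulation is running and after the drone has been made non-static in model.sdf.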

droneDVS.pdf (58.5 KB)

quadrotor_model.pdf (245.2 KB)